2024: 0.5% of the Global Top 200 Websites Use Valid HTML

Published on Sep 11, 2024, filed under development, html, conformance.

For the latest analysis, check out the data for 2025.

HTML conformance data for 2024 are in, and the good news first: There’s an increase in the number of valid website home pages, going from 0 to 1.

The flip side: 199 of 200 of the most popular websites use HTML that’s faulty, that doesn’t exist, and/or that doesn’t work.

The usual disclaimer: The following data gives an impression of precision that is greater than warranted. The point of this annual analysis is to check on full HTML conformance—absence of markup errors—on home pages; that is, the specific error counts don’t matter for the purpose of telling conformance or non-conformance, and other pages aren’t being checked. I’m providing the data for further study and for comparability to previous years.

Also, before we begin: Where’s CSS? I took CSS validation out because 1) HTML quality is much more important than CSS quality (cf. The Most Important Thing Is to Get the HTML Right, though it could be clearer), and 2) the W3C CSS validator seems understandably but chronically behind the specifications, with false positives and false negatives (my experience thus far, which carries no judgment).

Analysis

As in every year since 2021 (cf. 2022, 2023), I used the annual update of the Ahrefs Top 1,000 websites to check the home pages of the top 200 websites (up from 100 in 2023) for HTML conformance. *

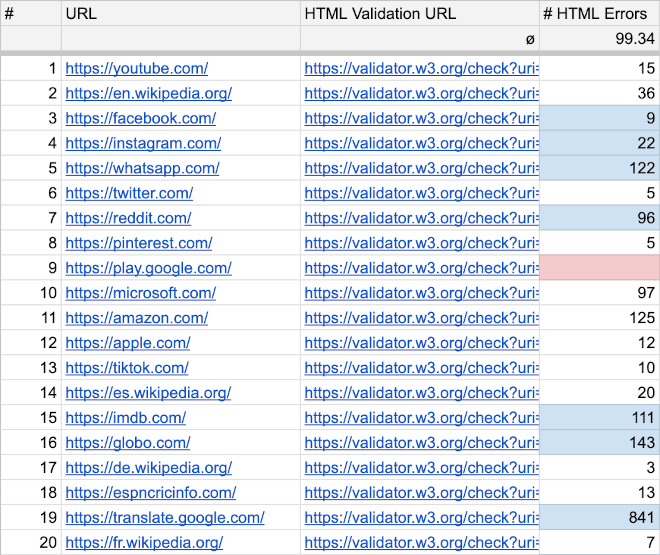

For this purpose I took all the respective URLs, prepared HTML validation URLs, validated the respective pages, and documented the error counts of the 200 tests in a spreadsheet.
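The URL-preparation step could look roughly like the following. This is a minimal sketch, not the tooling used for this analysis: it assumes the public Nu validator endpoint with its `doc` and `out` query parameters, and the function name `validation_url` and the sample home pages are hypothetical.

```python
from urllib.parse import urlencode

# Public W3C Nu validator endpoint; "doc" names the page to check.
VALIDATOR_ENDPOINT = "https://validator.w3.org/nu/"


def validation_url(page_url: str, as_json: bool = False) -> str:
    """Return a validator URL that checks the given page (hypothetical helper)."""
    params = {"doc": page_url}
    if as_json:
        # "out=json" asks the validator for machine-readable output.
        params["out"] = "json"
    return f"{VALIDATOR_ENDPOINT}?{urlencode(params)}"


# Hypothetical sample; the real list would come from the Ahrefs ranking.
home_pages = ["https://example.com/", "https://example.org/"]
validation_urls = [validation_url(url) for url in home_pages]
```

Opening each resulting URL in a browser shows the validator report for that home page; the JSON variant is more convenient when the counts are collected by a script.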

HTML Conformance in 2024

“Improved”: 1 of the 200 home pages has 0 HTML conformance errors, namely a splash screen of the Unique Identification Authority of India.

Improved: 5 home pages (of Adobe, Speedtest, Poki, the NHS, and Gramedia) were super-close to conformance, with 1 error each.

The issues—error messages—on these single-error home pages?

- “An `img` element must have an `alt` attribute, except under certain conditions.”
- “Bad value `true` for attribute `async` on element `script`.”
- “No `li` element in scope but a `li` end tag seen.”
- “Bad value `tel: 111` for attribute `href` on element `a`: Illegal character in scheme data: space is not allowed.”
- “Element `head` is missing a required instance of child element `title`.”

Improved: 44 more home pages had a single-digit number of issues.

Degraded: 56 were far away from conformance with any specification (100 or more errors).

Improved: The average number of HTML errors is 99.34.

Improved: The HTML errors median is 35.5.

(Improved: The mode is 5.)
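For anyone who wants to recompute such summary figures from a list of per-page error counts, here is a minimal sketch. The function name `summarize`, the bucket labels, and the sample counts are hypothetical (they are not the 2024 data), and the single-digit bucket assumes that “44 more” refers to pages with 2 to 9 errors.

```python
from statistics import mean, median, multimode


def summarize(error_counts):
    """Summarize per-page HTML error counts (hypothetical helper)."""
    return {
        "pages": len(error_counts),
        "valid": sum(1 for n in error_counts if n == 0),
        "single_error": sum(1 for n in error_counts if n == 1),
        # Assumption: "single-digit" excludes the 0- and 1-error pages.
        "single_digit": sum(1 for n in error_counts if 2 <= n <= 9),
        "hundred_plus": sum(1 for n in error_counts if n >= 100),
        "mean": round(mean(error_counts), 2),
        "median": median(error_counts),
        "modes": multimode(error_counts),
    }


# Made-up error counts for eight pages, for illustration only.
sample_counts = [0, 1, 5, 5, 12, 40, 130, 247]
summary = summarize(sample_counts)
```

The share of valid home pages then follows as `summary["valid"] / summary["pages"]`; for the 2024 data, 1 of 200 gives the 0.5% in the title.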

HTML Conformance Over Time

We need to test more websites for this to be significant, but after this new analysis, here is how error counts developed over the years:

| | 2021 | 2022 | 2023 | 2024 |
|---|---|---|---|---|
| Average number of HTML errors on home page | 125.22 | 125.63 → | 132.14 ↗ | 99.34 ↘ |
| Home pages without errors | 2% | 0% ↘ | 0% → | 0.5% ↗ |

Notes and Observations

First, the W3C HTML validator (i.e., the “Nu” portion handling living HTML) is too helpful and makes work on fixing and analyzing HTML unnecessarily difficult. If you’re using it, too, you’ll know how it mixes HTML errors with CSS and other errors. This has significantly slowed down the work on this analysis, and it introduced a potential error source by requiring me to manually deduct non-HTML errors from the error counts. This is a design and usability issue, though, so I hope the team can improve this, for example by grouping and counting errors by language and topic, or by making this configurable. (Subscribe to and chime in on Nu Validator issue #940 if you’d like to see this improved, too.)
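The manual deduction described above could in principle be scripted against the validator’s JSON output. The following is only a sketch under assumptions: it relies on each message carrying a `type` field and on CSS findings being recognizable by a `CSS:` prefix in the message text, which is my reading of the output rather than a documented guarantee. The helper name and the sample messages are hypothetical.

```python
def count_html_errors(messages):
    """Count error-level messages that do not look like CSS findings.

    Assumption: CSS findings start with "CSS:" in their message text.
    """
    return sum(
        1
        for message in messages
        if message.get("type") == "error"
        and not message.get("message", "").startswith("CSS:")
    )


# Hypothetical excerpt of a validator response's "messages" array.
sample_messages = [
    {"type": "error", "message": "Element head is missing a required instance of child element title."},
    {"type": "error", "message": "CSS: Parse Error."},
    {"type": "info", "subType": "warning", "message": "Consider adding a lang attribute."},
]

html_error_count = count_html_errors(sample_messages)
```

A prefix check like this is brittle, which is part of why grouping by language in the validator itself would be the better fix.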

Many more companies and organizations than ever block the W3C HTML validator. In 2023, 12 of 100 websites (12%) couldn’t be validated; in 2024, the share doubled, with 48 of 200 websites (24%). I didn’t (and might not have been able to) analyze the nature of the blocking, though. That is, it could be intentional (as with geo-blocking), or it could be unintentional (perhaps caused by some overeager edge tooling).
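Because the sample size changed between the two years, the comparison only works on shares, not on absolute counts. A small worked check, using the figures from this paragraph (the variable names are just illustrative):

```python
from fractions import Fraction

# Share of websites that could not be validated, per year.
blocked_2023 = Fraction(12, 100)  # 12 of 100 websites
blocked_2024 = Fraction(48, 200)  # 48 of 200 websites

# Relative change of the share between the two years.
relative_increase = blocked_2024 / blocked_2023

print(f"2023: {float(blocked_2023):.0%}, 2024: {float(blocked_2024):.0%}")
print(f"Factor: {relative_increase}")
```

In absolute terms the count quadrupled (12 to 48), but since the sample doubled as well, the share went from 12% to 24%.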

Redirects, interstitials, and cookie pages made validation hard as well. In the legend I emphasize that I no longer track or highlight these instances. After all, the analysis checks whether the most popular websites use valid HTML, and for that purpose it’s interesting but not decisive how many issues there are, or whether a tested page happens not to be the main page.

There was one website in the sample, Fast.com, that used HTML 4.01 Strict! Unfortunately, the document was written following XHTML–HTML, which came with many related conformance issues.

There was also one instance that couldn’t be validated at all. I’ve passed on my observations to the W3C team.

Interpretation

I’m sharing this annual report for your and the community’s interpretation.

Yet here are two cents:

For the new data, there are several improvements (notably a lower average number of HTML errors). That’s better than if we had observed more degradations, but it’s not clear whether we’re dealing with any significant shift. Sustained improvements with more websites using valid HTML would underscore such a shift.

I’m writing a lot about HTML conformance, and I aim to write more about it. For those of you who don’t know this part of my work, I think we all benefit from ensuring HTML conformance because it’s a foundational quality attribute that hedges against shipping unnecessary and dysfunctional code and payload to our users, and because it’s the easiest-to-implement quality bar we can employ in our profession. Professional web developers write valid HTML.

If you’re working on a website covered in the analysis (really, if you’re working on any website), write HTML, and check (validate) that what you ship is valid HTML.
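One way to make that check part of a build or review step is to send the HTML you are about to ship to the validator. The following is a minimal sketch, assuming the public Nu validator accepts a POST of the document body with `out=json`; the function names and the User-Agent string are hypothetical, and a self-hosted validator instance may be the better choice for frequent checks.

```python
import json
import urllib.request

# Assumed public endpoint; a self-hosted instance would use a different URL.
NU_ENDPOINT = "https://validator.w3.org/nu/?out=json"


def build_validation_request(html: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request carrying the document."""
    return urllib.request.Request(
        NU_ENDPOINT,
        data=html.encode("utf-8"),
        headers={
            "Content-Type": "text/html; charset=utf-8",
            # Hypothetical identifier for this script.
            "User-Agent": "html-conformance-check/0.1",
        },
        method="POST",
    )


def validate(html: str, timeout: float = 30.0) -> list:
    """Send the document and return the validator's messages (network call)."""
    request = build_validation_request(html)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response).get("messages", [])
```

Calling `validate(open("index.html", encoding="utf-8").read())` would return the list of messages for a local file named `index.html` (a placeholder); an empty list of error-level messages is what you are aiming for.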

* I do so faithfully, that is, I don’t check and question Ahrefs’ methodology. There have been concerns about some of these top sites in the past (including that a few seemed spammy), but I’m leaving that issue in Ahrefs’ part of the field. This is not to say that you shouldn’t be critical about any of this.

About Me

I’m Jens (long: Jens Oliver Meiert), and I’m an engineering lead, guerrilla philosopher, and indie publisher. I’ve worked as a technical lead and engineering manager for companies you use every day and companies you’ve never heard of, I’m an occasional contributor to web standards (like HTML, CSS, WCAG), and I write and review books for O’Reilly and Frontend Dogma.

I love trying things, not only in web development and engineering management, but also in philosophy. Here on meiert.com I share some of my experiences and perspectives. (I value you being critical, interpreting charitably, and giving feedback.)